I tend to take a lot of somewhat random photos of things that interest me. If it’s a good photo and the mood strikes, I’ll share it on various social media channels.

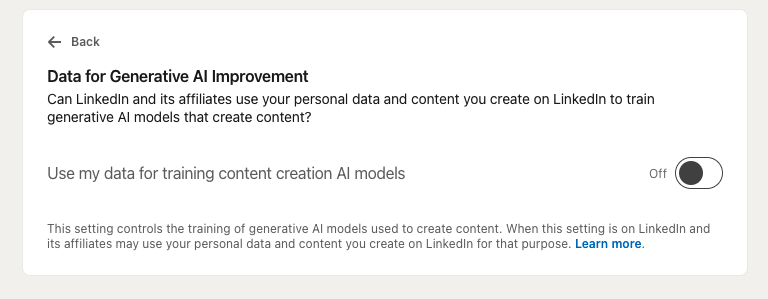

One image I recently shared featured a stack of “Wooden Bitcoin” with a joke about their value (there are certain scenarios where the wooden ones are quite valuable).

When I shared the image on social media (and when I embedded it above), I included an image description for accessibility purposes.

A small pile of wooden disks on a silver background. An outer circle on each disk reads “Wooden Bitcoin. Just as Good, Really.” Text on an inner circle reads “To Redeem: Insert into any Internet Coin Slot.” The Bitcoin logo appears at the center of each disk.

That description wasn’t very hard to come up with, but sometimes, it’s a bit more difficult to convey all the visual details. For example, consider this image of winterberries.

In the embedded image’s alt text, I’ve described it as “Bright red winterberries on bare branches,” which is fairly accurate, though it doesn’t offer a lot of detail.

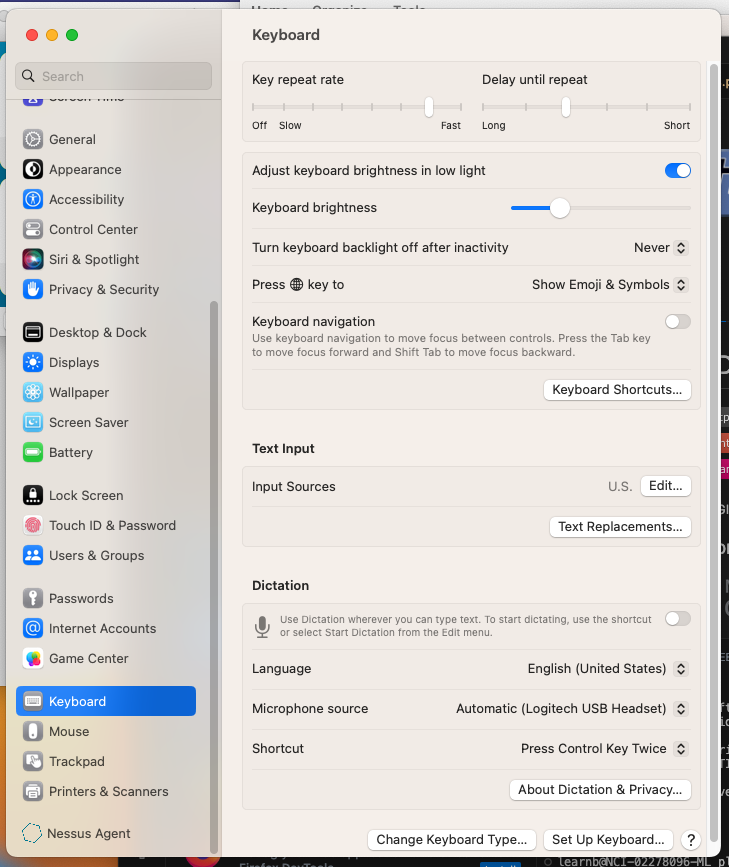

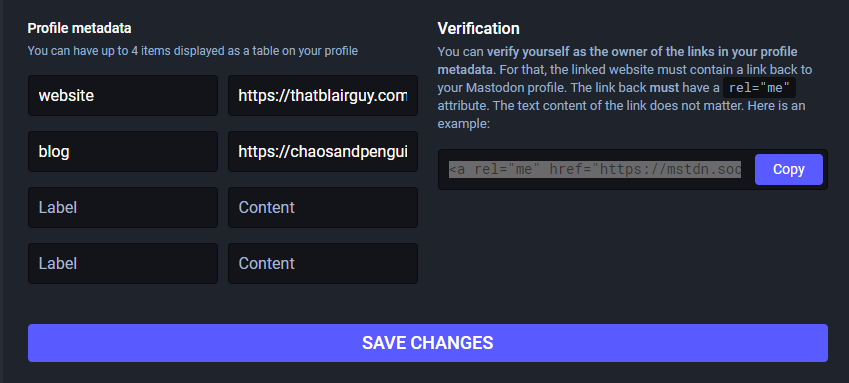

Much like everyone else, I’ve been getting my feet wet with AI. Lately, I’ve been using Ollama to run models locally for various toy projects, and more recently, I noticed that Meta’s llama3.2-vision model, which can work with image files, had become available.

Uploading the winterberry image, I gave llama the prompt:

Please describe this image.

What I got back was quite a bit more detailed than what I’d come up with on my own.

This image presents a close-up view of a vibrant red berry bush, showcasing its intricate details.

The bush’s branches are bare and grayish-brown in color, with numerous small twigs extending from the main stems. The berries themselves are bright red, spherical in shape, and densely packed along the branches. They appear to be ripe, as they have lost their green hue and turned a deep crimson color.

In the background, other bushes or plants can be seen, although they are not clearly visible due to the dense foliage of the berry bush. The overall atmosphere suggests that this image was captured during the winter season, likely in December or January, given the bare branches and ripe berries.

That description pretty well knocks it out of the park! And although the image contains no Exif data, the AI correctly guessed that the photo was taken “…in December or January” (December 31, in fact, right on the cusp of the change).
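If you’d like to try something similar, here’s a minimal Python sketch of sending a photo and that same prompt to a locally running Ollama server. It’s an illustration, not necessarily how the descriptions above were produced. It assumes Ollama’s default REST endpoint (`http://localhost:11434/api/generate`) and a model already pulled with `ollama pull llama3.2-vision`; the helper names `build_payload` and `describe_image` are hypothetical.

```python
import base64
import json
import urllib.request
from pathlib import Path

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "llama3.2-vision"
PROMPT = "Please describe this image."


def build_payload(image_bytes: bytes, prompt: str = PROMPT) -> dict:
    """Build a request body for Ollama's /api/generate endpoint.

    Images go in the "images" list as base64-encoded strings.
    "stream": False asks for one JSON reply instead of streamed chunks.
    """
    return {
        "model": MODEL,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }


def describe_image(path: str, prompt: str = PROMPT) -> str:
    """Send one image to the local model and return its text description."""
    payload = build_payload(Path(path).read_bytes(), prompt)
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),  # supplying data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # With streaming disabled, the generated text is in the "response" field.
        return json.loads(response.read())["response"]
```

Calling `print(describe_image("winterberries.jpg"))` (substituting your own file name) should return a description along the lines of the one above, though the wording will likely vary from run to run.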

For comparison, I uploaded the same image to ChatGPT, using the current “GPT-4o mini” model. Entering the same prompt, I got a similarly detailed, albeit shorter, description.

This image shows branches covered with clusters of bright red berries. The berries are small, smooth, and shiny, growing densely along the thin, leafless twigs. These are likely the fruits of a holly plant, possibly winterberry (Ilex verticillata), which is known for its vibrant red berries that remain on the branches during winter, adding color to the landscape. The background is blurred, emphasizing the striking red berries in the foreground.

Again, that’s a great description. No guesses on when the photo was taken, but this time, ChatGPT correctly recognized that these were winterberries.

In the case of llama 3.2, I’d likely remove the guess about when the photo was taken, since I know that information. For ChatGPT, I’d take out the guesswork about the type of berries, since I already know what they are. But to be honest, either of these outputs would be a good starting point for describing the photo.

So, what about that first photo with the wooden bitcoins?

Here’s the llama output. It’s wordy and repeats some of the details multiple times. I also disagree with its description of the Bitcoin logo: the curved lines form the letter “B,” not a circle.

The image shows a collection of wooden coins with the Bitcoin logo and text on them, likely used as tokens or rewards in an online game or platform.

The coins are made of wood and have a circular shape.

They feature the Bitcoin logo in the center, which is a stylized letter “B” made up of two curved lines that form a circle.

The text on the coins reads “WOODEN BITCOIN” at the top and “JUST AS GOOD, REALLY” at the bottom.

There are 15 coins visible in the image, arranged randomly on a gray surface.

Some of the coins overlap each other, creating a sense of depth and layering.

The background is a plain gray color, which helps to focus attention on the coins.

Overall, the image suggests that these wooden coins are being used as a novelty item or collectible, perhaps in conjunction with an online game or platform that rewards players with virtual currency.

ChatGPT did better on this one.

This image shows a pile of wooden tokens designed to resemble bitcoins. Each token is circular and engraved with text and imagery related to Bitcoin. The engraving includes the Bitcoin logo (a “B” with two vertical lines) and phrases such as “Wooden Bitcoin,” “Just As Good, Really,” and “To Redeem: Insert into any internet coin slot.” The tokens are humorously crafted to mimic physical representations of digital currency.

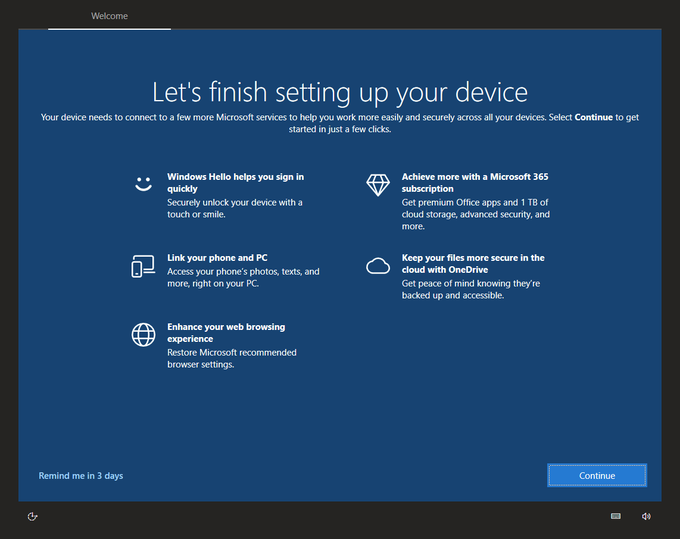

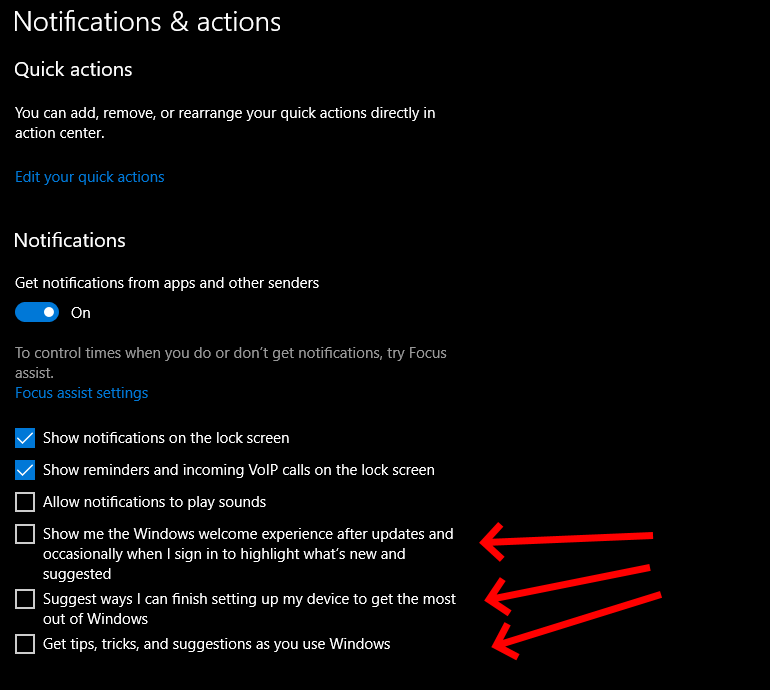

ChatGPT clearly did the better job here; it even caught the inner “To Redeem: Insert into any internet coin slot” text, which llama missed entirely. Both tools did a credible job of recognizing text presented in an unusual, circular format, but I’m not sure I’d trust either one to generate a description without reviewing it before sending it out into the world.

This is a great step toward solving the “blank page problem,” since it will most likely give me a solid starting point for describing an image. (It’s also a huge step up from xkcd’s “Tasks” cartoon.)

At the moment, my phone’s camera roll has nearly 10,000 photos on it (I really do take a lot of somewhat random photos). Running them all through AI could save a lot of tedium in writing descriptions. But along with the need to double-check those descriptions, I’m still on the hook for deciding whether each image is even worth sharing.
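For a camera roll that size, a batch run might look something like the following rough Python sketch. It assumes the photos have already been exported to a local folder, and the `describe` argument stands in for whatever model call you prefer (for example, a function that posts each image to a local Ollama server). The function name `draft_descriptions`, the accepted file extensions, and the `.draft.txt` sidecar naming are all hypothetical choices. Writing drafts to files instead of publishing them directly keeps the human review step in the loop.

```python
from pathlib import Path
from typing import Callable, List

# Assumption: these are the image formats worth sending to the model.
PHOTO_SUFFIXES = {".jpg", ".jpeg", ".png"}


def draft_descriptions(folder: str, describe: Callable[[Path], str]) -> List[Path]:
    """Write a draft description next to each photo for later human review.

    `describe` is any function that takes an image path and returns text,
    such as a wrapper around a local vision model (hypothetical here).
    Photos that already have a draft are skipped, so an interrupted run
    can simply be restarted. Returns the paths of newly written drafts.
    """
    written = []
    for photo in sorted(Path(folder).iterdir()):
        # Skip subfolders and anything that isn't a supported image type.
        if not photo.is_file() or photo.suffix.lower() not in PHOTO_SUFFIXES:
            continue
        # e.g. IMG_0042.jpg -> IMG_0042.jpg.draft.txt
        draft = photo.with_name(photo.name + ".draft.txt")
        if draft.exists():
            continue
        draft.write_text(describe(photo), encoding="utf-8")
        written.append(draft)
    return written
```

Something like `draft_descriptions("exported-photos", lambda path: "TODO")` is a quick way to check the file handling before wiring in a real model.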

Using an AI tool definitely has potential to save me some time. But it’s not a panacea.