I have a few old sites I created in WordPress but no longer update aside from installing newer versions of WordPress. I don’t want to run out of date software, but I also don’t want to take down the content.

It’s been a decade since Fanboy’s Convention List was last updated, but there are blog posts with well established URLs. (Besides, I still have dreams of one day reviving the site.)

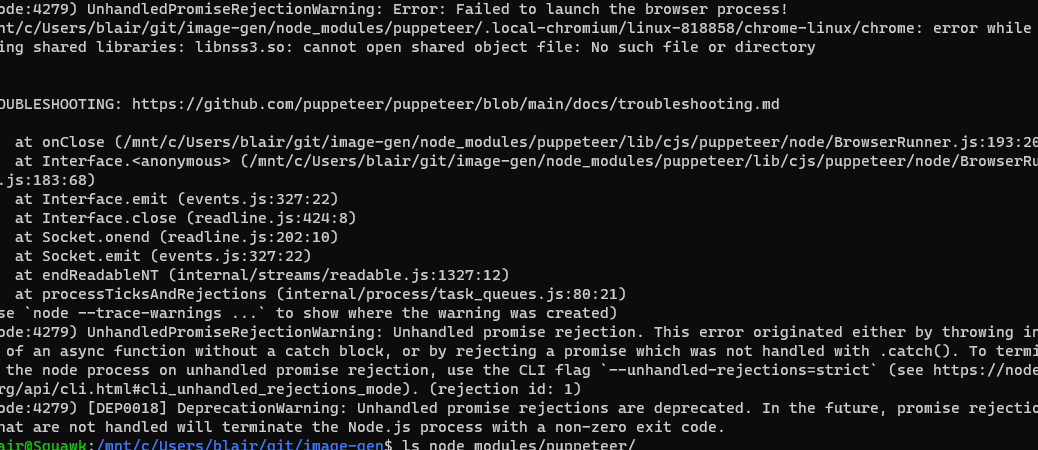

My solution is to first create backups of everything, then spider the site, capture all the generated HTML, and put the static pages back in place of the dynamically generated ones.

I’ve known about GNU Wget for just about forever, but only as an alternative to curl. What I discovered is that Wget has a --mirror option, which lets you download an entire site. There are many related options worth looking into (so do read the docs), but what I finally settled on for my purposes was:

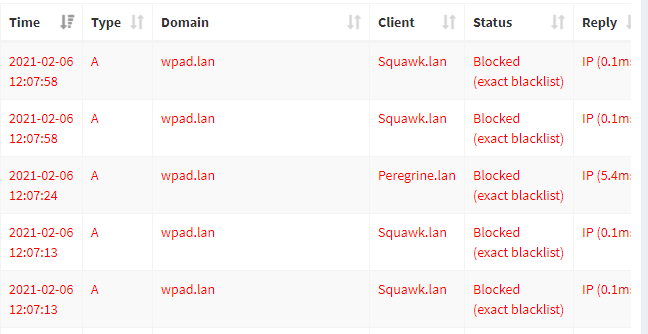

wget --mirror --page-requisites --wait=2 https://www.fanboyslist.com

Note: The --wait=2 option makes Wget wait two seconds between requests. If you’re using this to mirror someone else’s site, consider using a higher value in order to avoid overloading their server. Badly behaved spiders can wreak havoc on sites with dynamically generated pages and may be blocked as a result.
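As a minimal sketch of making that politeness setting adjustable, the helper below only prints a Wget command line and never runs it. The function name `mirror_cmd` is made up for illustration, and adding `--random-wait` (a standard Wget option that varies the delay around the `--wait` value) is my assumption, not part of the original command.

```shell
#!/bin/sh
# mirror_cmd URL [WAIT_SECONDS]
# Hypothetical helper: prints a mirror command instead of running it,
# so the wait time can be reviewed before anything hits the server.
mirror_cmd() {
    url=$1
    wait_seconds=${2:-2}   # default to the two seconds used above
    # --random-wait is an assumption added here; drop it to match the original.
    printf 'wget --mirror --page-requisites --wait=%s --random-wait %s\n' \
        "$wait_seconds" "$url"
}
```

Calling `mirror_cmd https://www.fanboyslist.com 5` prints the command with `--wait=5`. Once it looks right, it can be pasted into the terminal.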

Fun fact: although you may associate Wget with Linux, it’s also available for Windows. There are some differences in which characters are used in output file names, but it should otherwise work the same way.

On the first pass, instead of directories mirroring the site structure, there were a bunch of files with names like index.html?p=257 (on Windows, where Wget replaces the ? in file names, this would show up as index.html@p=257). It turns out that at some point, the site’s permalinks had been turned off and WordPress had reverted to query string parameters.
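A quick way to spot these after each pass is to list every mirrored file whose name still contains a query string. This is only a sketch: `list_query_files` is a hypothetical helper name, and it assumes the `?` is kept literally in the file name, as it is on Linux.

```shell
#!/bin/sh
# list_query_files DIR
# Hypothetical helper: lists files under DIR whose names contain a
# literal "?", i.e. pages that Wget saved from query-string URLs.
list_query_files() {
    # Inside a bracket expression, "?" is matched literally, not as a wildcard.
    find "$1" -type f -name '*[?]*' | sort
}
```

By default Wget saves a mirror under a directory named after the host, so `list_query_files www.fanboyslist.com` would be the call here. An empty result means no query-string pages were captured. On a platform where Wget substitutes another character for the `?`, the pattern needs adjusting.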

Fix the permalinks, and make sure categories and tags have names instead of parameters.

The next pass had the directory structure, but still had the files with query strings. Digging in a bit, I found that WordPress was generating “shortlinks” in the form:

https://www.fanboyslist.com/blog/?p=257

Shortlinks are a microformat, meant to provide a shorter link for when you’re manually typing the URL. But this site doesn’t provide a means of discovering them manually, and I’m trying to remove the mechanism for resolving them, so they aren’t needed in the static pages (for my purposes, the canonical URL is much more useful).

One Google search later, I found a comment on a support thread about disabling shortlinks. In a nutshell, add this line to the end of the theme’s functions.php file:

remove_action('wp_head', 'wp_shortlink_wp_head', 10, 0);

(Note: I’m trying to remove this entire WordPress installation, so I’m going to modify the theme’s file. On an installation you were planning to keep, this should go in a child theme.)

While we’re fiddling with functions.php, remove the headers for the REST API (from https://wordpress.stackexchange.com/a/211469):

remove_action( 'wp_head', 'rest_output_link_wp_head' );
remove_action( 'wp_head', 'wp_oembed_add_discovery_links' );
remove_action( 'template_redirect', 'rest_output_link_header', 11 );

Next, let’s get rid of the bit where the site is loading support for emoji (this site predates most US use of emoji). Here’s a nice little snippet from https://www.netmagik.com/how-to-disable-emojis-in-wordpress/:

/**
 * Disable the emoji's
 */
function disable_emojis() {
    remove_action( 'wp_head', 'print_emoji_detection_script', 7 );
    remove_action( 'admin_print_scripts', 'print_emoji_detection_script' );
    remove_action( 'wp_print_styles', 'print_emoji_styles' );
    remove_action( 'admin_print_styles', 'print_emoji_styles' );
    remove_filter( 'the_content_feed', 'wp_staticize_emoji' );
    remove_filter( 'comment_text_rss', 'wp_staticize_emoji' );
    remove_filter( 'wp_mail', 'wp_staticize_emoji_for_email' );
    // Remove from TinyMCE
    add_filter( 'tiny_mce_plugins', 'disable_emojis_tinymce' );
}
add_action( 'init', 'disable_emojis' );

/**
 * Filter out the tinymce emoji plugin.
 */
function disable_emojis_tinymce( $plugins ) {
    if ( is_array( $plugins ) ) {
        return array_diff( $plugins, array( 'wpemoji' ) );
    } else {
        return array();
    }
}

Remove the individual RSS feeds for each post’s comments:

add_filter( 'feed_links_show_comments_feed', '__return_false' );

And then, a pile of other things to remove comes from this answer on Stack Overflow:

remove_action( 'wp_head', 'feed_links_extra', 3 ); // Display the links to the extra feeds such as category feeds
remove_action( 'wp_head', 'feed_links', 2 ); // Display the links to the general feeds: Post and Comment Feed
remove_action( 'wp_head', 'rsd_link' ); // Display the link to the Really Simple Discovery service endpoint, EditURI link
remove_action( 'wp_head', 'wlwmanifest_link' ); // Display the link to the Windows Live Writer manifest file.
remove_action( 'wp_head', 'index_rel_link' ); // index link
remove_action( 'wp_head', 'parent_post_rel_link', 10, 0 ); // prev link
remove_action( 'wp_head', 'start_post_rel_link', 10, 0 ); // start link
remove_action( 'wp_head', 'adjacent_posts_rel_link', 10, 0 ); // Display relational links for the posts adjacent to the current post.

And then, one item that isn’t in functions.php: get rid of all the <link rel="pingback".... lines by installing the bye-bye-pingback plugin.

The theme was pretty old, based on Kubrick from around 2009, and some of the cleanup actually had to be done by editing theme files (e.g. removing the blog’s overall RSS feed). With all these changes in place, I can now run Wget one last time and get a clean copy of the blog.
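To double-check that final copy, something like the sketch below can scan the mirrored files for head links that should no longer be there. The helper name `leftover_head_links` is hypothetical, and the `rel` values it searches for are my assumption about how WordPress typically writes these tags, so adjust the pattern to whatever your own pages contained. It also relies on `grep -r`, which GNU and BSD grep support but strict POSIX grep does not.

```shell
#!/bin/sh
# leftover_head_links DIR
# Hypothetical helper: lists files under DIR that still contain a
# shortlink, pingback, EditURI, or wlwmanifest <link> tag.
leftover_head_links() {
    # Assumed markup: rel="..." or rel='...' with one of the names below.
    grep -rlE "rel=[\"'](shortlink|pingback|EditURI|wlwmanifest)[\"']" "$1" | sort
}
```

Running `leftover_head_links www.fanboyslist.com` against the mirror directory should print nothing once all the removals have taken effect. Any file it does list is worth opening to see which tag slipped through.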